ICRA 2025 BARN Challenge

Wondering how the state-of-the-art navigation systems work on autonomous navigation in highly-constrained spaces? Check out our detailed report on the results and findings of The BARN Challenge at ICRA 2025 here!

Congrats RRSL, RobotiXX, and UVA AMR for winning the 1st, 2nd, and 3rd place of The BARN Challenge at ICRA 2025! See you next year!

About

Designing autonomous robot navigation systems has been a topic of interest to the robotics community for decades. Indeed, many existing navigation systems allow robots to move from one point to another in a collision-free manner, which may create the impression that navigation is a solved problem. However, autonomous mobile robots still struggle in many scenarios, e.g., colliding with or getting stuck in novel and tightly constrained spaces. These troublesome scenarios have many real-world implications, such as poor navigation performance in adversarial search and rescue environments, in naturally cluttered daily households, and in congested social spaces such as classrooms, offices, and cafeterias. Meeting these real-world challenges requires systems that can both successfully and efficiently navigate the environment with confidence, posing fundamental challenges to current autonomous systems, from perception to control. Therefore, the Benchmark Autonomous Robot Navigation (BARN) Challenge aims at creating a benchmark for state-of-the-art navigation systems and pushing the boundaries of their performance in these challenging and highly constrained environments.

At ICRA 2024, we held the third BARN Challenge in Yokohama. Six teams competed in the simulation competition and the top four teams advanced into the physical finals. We congratulate LiCS-KI, MLDA_EEE, and AIMS for winning the 1st, 2nd, and 3rd place of The BARN Challenge at ICRA 2024. We published the results and findings about how the state-of-the-art navigation systems work in highly-constrained spaces in IEEE Robotics and Automation Magazine.

At ICRA 2023, we successfully continued with our second BARN Challenge in London. Ten teams competed in the simulation competition and the top six teams advanced into the physical finals. We congratulate KUL+FM, INVENTEC, and University of Almeria for winning the 1st, 2nd, and 3rd place of The BARN Challenge at ICRA 2023. We published the results and findings about how the state-of-the-art navigation systems work in highly-constrained spaces in IEEE Robotics and Automation Magazine.

At ICRA 2022, we successfully launched the first BARN Challenge in Philadelphia. Five teams competed in the simulation competition and the top three teams advanced into the physical finals. We congratulate UT AMRL, UVA AMR, and Temple TRAIL for winning the 1st, 2nd, and 3rd place of The BARN Challenge at ICRA 2022. We published the results and findings about how the state-of-the-art navigation systems work in highly-constrained spaces in IEEE Robotics and Automation Magazine.

The Challenge

The BARN Challenge will take place primarily on the simulated BARN dataset and also physically at the ICRA 2025 conference venue in Atlanta.

The BARN dataset comprises 300 pre-generated navigation environments, ranging from easy open spaces to difficult highly constrained ones, and an environment generator that can generate novel BARN environments. The task is to navigate a Clearpath Jackal robot from a predefined start to a goal location as quickly as possible without any collision. The Jackal robot will be standardized with a 2D LiDAR, a motor controller with a max speed of 2m/s, and appropriate computational resources. Participants will need to develop navigation systems which consume the standardized LiDAR input, run all computation onboard using the provided resources, and output motion commands to drive the motors. Participants are welcome to use any approaches to tackle the navigation problem, such as using classical sampling-based or optimization-based planners, end-to-end learning, or hybrid approaches. The following infrastructure will be provided by the competition organizers:

- The 300 pre-generated BARN environments

- The BARN environment generator to generate novel environments

- Baseline navigation systems including classical (default DWA, fast DWA, and E-Band), end-to-end learning (e2e RL and LfLH), and hybrid (APPLR) approaches

- A training pipeline running the standardized Jackal robot in Gazebo simulation with Robot Operating System (ROS) Melodic (in Ubuntu 18.04), with the option of being containerized in Docker or Singularity containers for fast and standardized setup and evaluation

- A standardized evaluation pipeline to compete against other navigation systems

Competition Rules

During the competition, another 50 new BARN evaluation environments will be generated, which will not be accessible to the public. Each participating team is required to submit the developed navigation system as a (collection of) launchable ROS node(s). The final performance will be evaluated based on a standardized metric that considers navigation success rate (a collision or a failure to reach the goal counts as a failure), actual traversal time, and environment difficulty (measured by optimal traversal time). Specifically, the score $s_i$ for navigating each environment $i$ will be computed as

$$s_i = 1^{\textrm{success}}_i \times \frac{\textrm{OT}_i}{\textrm{clip}(\textrm{AT}_i, 2\textrm{OT}_i, 8\textrm{OT}_i)},$$

where the indicator $1^{\textrm{success}}_i$ is set to $1$ if the robot reaches the navigation goal without any collisions, and set to $0$ otherwise. $\textrm{AT}_i$ denotes the actual traversal time, while $\textrm{OT}_i$ denotes the optimal traversal time, which serves as an indicator of the environment difficulty and is measured by the shortest traversal time assuming the robot always travels at its maximum speed ($2\textrm{m/s}$):

$$\textrm{OT}_i = \frac{\textrm{Path Length}_i}{\textrm{Maximal Speed}}.$$

The Path Length is provided by the BARN dataset based on Dijkstra's search. The clip function clips $\textrm{AT}_i$ within $2\textrm{OT}_i$ and $8\textrm{OT}_i$, in order to ensure that navigating extremely quickly or slowly in easy or difficult environments won't disproportionately scale the score. Notice that we changed the upper performance bound $4\textrm{OT}_i$ from the past two years to $2\textrm{OT}_i$ this year, considering the improving navigation performance. The overall score of each team is the score averaged over all 50 test BARN environments, with 10 trials in each environment. Higher scores indicate better navigation performance.
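The scoring rule described above can be sketched in a few lines of Python. This is a minimal illustration and not the organizers' evaluation code. It assumes the per-trial score is the success indicator times OT divided by AT clipped to the range from 2 OT to 8 OT, with OT equal to the path length divided by the 2 m/s maximum speed. The function names and the trial tuple layout are hypothetical.

```python
from typing import Iterable, Tuple

MAX_SPEED_MPS = 2.0  # maximum Jackal speed stated in the challenge description


def optimal_time(path_length_m: float, max_speed_mps: float = MAX_SPEED_MPS) -> float:
    """OT: time to cover the path at maximum speed."""
    return path_length_m / max_speed_mps


def trial_score(success: bool, actual_time_s: float, path_length_m: float) -> float:
    """Score of one trial: 0 on failure, otherwise OT / clip(AT, 2*OT, 8*OT)."""
    if not success:
        return 0.0
    ot = optimal_time(path_length_m)
    clipped_at = min(max(actual_time_s, 2.0 * ot), 8.0 * ot)
    return ot / clipped_at


def overall_score(trials: Iterable[Tuple[bool, float, float]]) -> float:
    """Average score over all trials, each given as (success, AT in s, path length in m)."""
    scores = [trial_score(*trial) for trial in trials]
    return sum(scores) / len(scores)


if __name__ == "__main__":
    # Hypothetical trials on a 10 m path, so OT = 5 s.
    trials = [
        (True, 8.0, 10.0),    # faster than 2*OT, AT is clipped up to 10 s
        (True, 20.0, 10.0),   # within the clip range
        (True, 100.0, 10.0),  # slower than 8*OT, AT is clipped down to 40 s
        (False, 12.0, 10.0),  # collision or goal not reached
    ]
    print([trial_score(*t) for t in trials])
    print(overall_score(trials))
```

In the actual evaluation the average would run over 50 environments with 10 trials each; the four trials here only exercise the clipping and failure cases.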

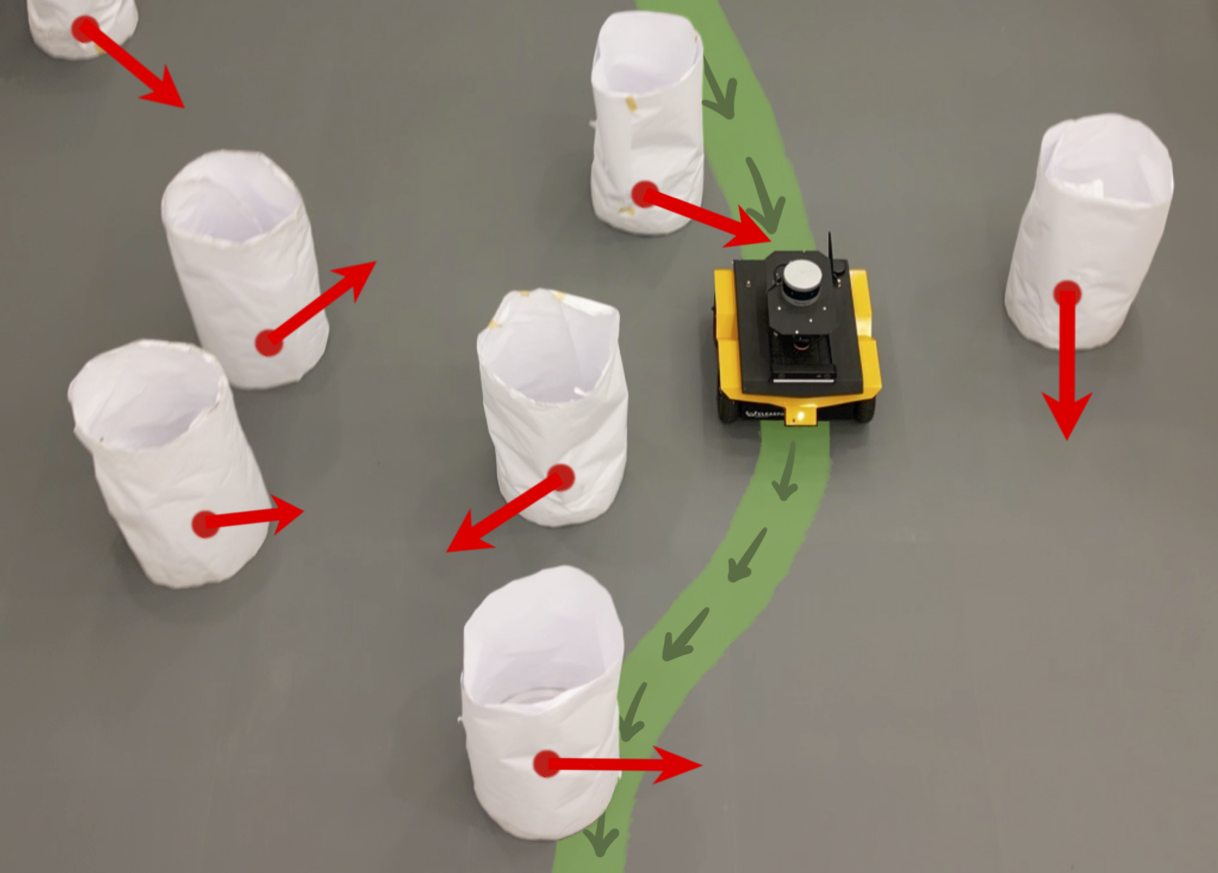

During the in-person conference in Atlanta, physical obstacle courses will be set up at the conference venue using cardboard boxes (see the example image). A physical Clearpath Jackal robot will be provided by the competition sponsor, Clearpath Robotics, with the same standard sensor and actuator suites as in the simulation. However, a sim-to-real gap may still exist due to factors such as the friction coefficient between the physical wheels and the venue floor, electric motor impedance and resistance, real-world sensor noise distribution, etc. The top three teams will be invited to compete in the physical competition. In case a team is not able to attend the conference in person, the organizers will run their submitted navigation stack on their behalf on the physical robot at the conference. The team that achieves the highest collision-free navigation success rate and the shortest traversal time wins the competition.
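One way to read the physical-competition ranking rule is as a two-level sort: success rate first, then traversal time as a tie-breaker. The sketch below assumes exactly that reading, which the rules do not spell out, and it makes no claim about how the traversal time is aggregated over trials. The `Result` type and `rank` function are hypothetical names. The sample entries reuse the 2024 real-world results from the leaderboard, where a time is listed only for the two tied teams.

```python
import math
from typing import List, NamedTuple, Optional


class Result(NamedTuple):
    team: str
    successes: int
    trials: int
    time_s: Optional[float]  # traversal time, if reported


def rank(results: List[Result]) -> List[Result]:
    """Sort by success rate (descending), then by traversal time (ascending).

    A missing time sorts last within a tie; this is an assumption of the sketch.
    """
    def key(r: Result):
        time = r.time_s if r.time_s is not None else math.inf
        return (-r.successes / r.trials, time)

    return sorted(results, key=key)


if __name__ == "__main__":
    results_2024 = [
        Result("AIMS", 5, 9, 108.8),
        Result("LiCS-KI", 6, 9, None),
        Result("MLDA_EEE", 5, 9, 78.8),
    ]
    for place, r in enumerate(rank(results_2024), start=1):
        print(place, r.team, f"{r.successes}/{r.trials}")
```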

Due to the overhead of the simulator in the simulation phase, we do not specifically constrain computation. We will use a computer with Intel Xeon Gold 6342 CPU @ 2.80 GHz to evaluate all submissions. However, during the physical competition, robot onboard computation will be limited to an Intel i3 CPU (i3-9100TE or similar) with 16GB of DDR4 RAM. GPUs are not available considering the small footprint of the LiDAR perception data.

Starting this year, we will introduce dynamic obstacles in our evaluation pipeline, both in the DynaBARN simulation environments and in physical test courses with iRobot Create robots acting as dynamic obstacles. As the first competition with dynamic obstacles, we will only reward good performance when facing dynamic obstacles in terms of extra bonuses, but not penalize poor performance. More details to come soon.

Leaderboard

Real World

2025

| Ranking | Team | Success / Total Trials | Code Link |
|---|---|---|---|
| 1 | RRSL | 7/9 | GitHub |
| 2 | RobotiXX | 3/9 | GitHub |
| 3 | UVA AMR | 1/9 | GitHub |

2024

| Ranking | Team | Success / Total Trials | Code Link |
|---|---|---|---|
| 1 | LiCS-KI | 6/9 | GitHub |
| 2 | MLDA_EEE | 5/9 (78.8s) | GitHub |
| 3 | AIMS | 5/9 (108.8s) | GitHub |

2023

| Ranking | Team | Success / Total Trials | Code Link |
|---|---|---|---|
| 1 | KUL+FM | 9/9 | GitHub |
| 2 | INVENTEC | 6/9 | GitHub |
| 3 | University of Almeria | 5/9 | GitHub |

2022

| Ranking | Team | Success / Total Trials | Code Link |
|---|---|---|---|
| 1 | UT AMRL | 8/9 | GitHub |
| 2 | UVA AMR | 4/9 | GitHub |
| 3 | Temple TRAIL | 2/9 | GitHub |

Simulation

The scores and ranking have been updated using the new scoring metric (previous $4\textrm{OT}_i$ vs. current $2\textrm{OT}_i$, moving the previous performance upper bound from $0.25$ to the current $0.5$). See The BARN Challenge 2023 Leaderboard for the previous scores using the old metric.
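The bounds quoted above follow directly from the clipping, assuming the per-environment score of a successful run is $\textrm{OT}_i$ divided by $\textrm{AT}_i$ clipped to $[2\textrm{OT}_i, 8\textrm{OT}_i]$. A short worked check:

$$\max s_i = \frac{\textrm{OT}_i}{2\textrm{OT}_i} = 0.5 \quad \textrm{(current metric)}, \qquad \max s_i = \frac{\textrm{OT}_i}{4\textrm{OT}_i} = 0.25 \quad \textrm{(previous metric)},$$

$$\min_{\textrm{success}} s_i = \frac{\textrm{OT}_i}{8\textrm{OT}_i} = 0.125.$$

Under this reading, every successful trial scores between $0.125$ and $0.5$, and a failed trial scores $0$.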

| Ranking | Team | BARN (DynaBARN) Score | Comment |
|---|---|---|---|
| 1 | FSMT | 0.4878 (0.0000) | NA |
| 2 | RobotiXX | 0.4873 (0.1042) | George Mason University |
| 3 | LiCS-KI<sup>24</sup> | 0.4762 | Korea Advanced Institute of Science and Technology |
| 4 | EW-Glab | 0.4736 (0.0000) | Ewha Womans University |
| 5 | AIMS<sup>24</sup> | 0.4723 | The Hong Kong Polytechnic University |
| 6 | KUL+FM<sup>23</sup> | 0.4641 | KU Leuven & Flanders Make |
| 7 | LiCS-KI | 0.4611 (0.1125) | Korea Advanced Institute of Science and Technology |
| 8 | UT AMRL<sup>22</sup> | 0.4594 | The University of Texas at Austin |
| 9 | MDP-DWA | 0.4525 (0.0000) | University of Maryland |
| 10 | MLDA-NTU | 0.4510 (0.0201) | Nanyang Technological University, Singapore |
| 11 | University of Almeria<sup>23</sup> | 0.4460 | University of Almeria & Teladoc |
| 12 | LfLH<sup>b</sup> | 0.4354 | Details |
| 13 | INVENTEC<sup>23</sup> | 0.4206 | Inventec Corporation |
| 14 | e2e<sup>b</sup> | 0.4083 | Details |
| 15 | Temple TRAIL<sup>22</sup> | 0.4039 | Temple University |
| 16 | EIT-NUS<sup>24</sup> | 0.3795 | Eastern Institute of Technology, Ningbo |
| 17 | Staxel<sup>23</sup> | 0.3710 | Staxel |
| 18 | RRSL | 0.3558 (0.0001) | Michigan Technological University |
| 19 | UVA AMR | 0.3475 (0.0000) | University of Virginia |
| 20 | UVA AMR<sup>23</sup> | 0.3475 | University of Virginia |
| 21 | UVA AMR<sup>22</sup> | 0.3256 | University of Virginia |
| 22 | APPLR-DWA<sup>b</sup> | 0.3052 | Details |
| 23 | E-Band<sup>b</sup> | 0.2832 | Details |
| 24 | MLDA_EEE<sup>24</sup> | 0.2476 | Nanyang Technological University, Singapore |
| 25 | Yiyuiii<sup>22</sup> | 0.2376 | Nanjing University |
| 26 | The MECO Barners<sup>23</sup> | 0.2214 | KU Leuven |
| 27 | Fast DWA<sup>b</sup> | 0.2047 | Details |
| 28 | NavBot<sup>22</sup> | 0.1733 | Indian Institute of Science, Bangalore |
| 29 | Default DWA<sup>b</sup> | 0.1656 | Details |
| 30 | UT AMRL<sup>23</sup> | Missing | The University of Texas at Austin |
| 31 | Temple TRAIL<sup>23</sup> | Missing | Temple University |
| 32 | RIL<sup>23</sup> | Missing | Indian Institute of Science, Bengaluru |
| 33 | Armans Team | NA | Pabna University of Science and Technology |
| 34 | Lumnonicity<sup>23</sup> | NA | AICoE, Jio |
| 35 | Tartu Team<sup>24</sup> | NA | University of Tartu |
| 36 | CCWSS<sup>24</sup> | NA | Wuhan University of Science and Technology |

<sup>24</sup> denotes 2024 participants.

<sup>23</sup> denotes 2023 participants.

<sup>22</sup> denotes 2022 participants.

<sup>b</sup> denotes baselines.

Last updated: February 17 2025. If you do not see your submission, it is still under evaluation.

Participation

We have standardized the entire navigation pipeline using the BARN dataset in a Singularity container. You should only modify the navigation system, and leave other parts intact. You can develop your navigation system in your local environment or in a container. You will need to upload your code (e.g., to GitHub) and submit a Singularityfile.def file that accesses your code. We will build your Singularity container using the Singularityfile.def file you submitted, run the built container for evaluation, and then publish your score on our website. More detailed instructions can be found here. Please use this Submission Form to submit your navigation stack to be evaluated by our standardized evaluation pipeline.

Citing BARN

If you find BARN useful for your research, please cite the following paper:

@inproceedings{perille2020benchmarking,
  title = {Benchmarking Metric Ground Navigation},
  author = {Perille, Daniel and Truong, Abigail and Xiao, Xuesu and Stone, Peter},
  booktitle = {2020 IEEE International Symposium on Safety, Security and Rescue Robotics (SSRR)},
  year = {2020},
  organization = {IEEE}
}

Schedule

| Date | Event |
|---|---|
| January 1 2025 | Online submission open |
| April 1 2025 | Soft Submission Deadline<sup>1</sup> |
| April 15 2025 | 1st Round Physical Competition Invitations |
| May 1 2025 | Hard Submission Deadline<sup>2</sup> |
| May 8 2025 | 2nd Round Physical Competition Invitations |
| May 20 2025 9am-6pm | Team Set-up |
| May 21 2025 9am-noon | Physical Competition Obstacle Course 1 |
| May 21 2025 1pm-4pm | Physical Competition Obstacle Course 2 |
| May 22 2025 9am-noon | Physical Competition Obstacle Course 3 |
| May 22 2025 1pm-2pm | Award Ceremony and Open Discussions |

<sup>2</sup> Deadline to participate in the simulation competition. However, final physical competition eligibility will depend on the available capacity and travel arrangements made beforehand.

Organizers

Links

- BARN Dataset

- BARN Paper

- BARN Video

- BARN Presentation at SSRR 2020

- Source Code to Customize BARN

- Source Code to Run Simulation Trials

- Example Simulation Trial Results

- The BARN Challenge 2024 Report

- The BARN Challenge 2023 Report

- The BARN Challenge 2022 Report

Contact

For questions, please contact:

Dr. Xuesu Xiao

Assistant Professor

Department of Computer Science

George Mason University

4400 University Drive, Fairfax, VA 22030, USA

xiao@gmu.edu