Verti-Wheelers

[Paper] [Video] [Datasets] [Code]

About

Most conventional wheeled robots can only move in flat environments and simply divide their planar workspaces into free spaces and obstacles. Deeming obstacles as non-traversable significantly limits wheeled robots’ mobility in real-world, non-flat, off-road environments, where part of the terrain (e.g., steep slopes, rugged boulders) will be treated as non-traversable obstacles. To improve wheeled mobility in those non-flat environments with vertically challenging terrain, we present two wheeled platforms with little hardware modification compared to conventional wheeled robots; we collect datasets of our wheeled robots crawling over previously non-traversable, vertically challenging terrain to facilitate data-driven mobility; we also present algorithms and their experimental results to show that conventional wheeled robots have previously unrealized potential of moving through vertically challenging terrain. We make our platforms, datasets, and algorithms publicly available to facilitate future research on wheeled mobility.

Platforms

Considering that most existing mobile robots are wheeled, our goal is to make these readily available wheeled platforms capable of traversing vertically challenging terrain with little or no specialized hardware modification. To ensure that it is physically feasible for wheeled platforms to move through vertically challenging terrain, we identify the following seven desiderata for their hardware:

- All-Wheel Drive (D1)

- Independent Suspensions (D2)

- Differential Lock (D3)

- Low/High Gear (D4)

- Wheel Speed Sensing (D5)

- Ground Speed Sensing (D6)

- Actuated Perception (D7)

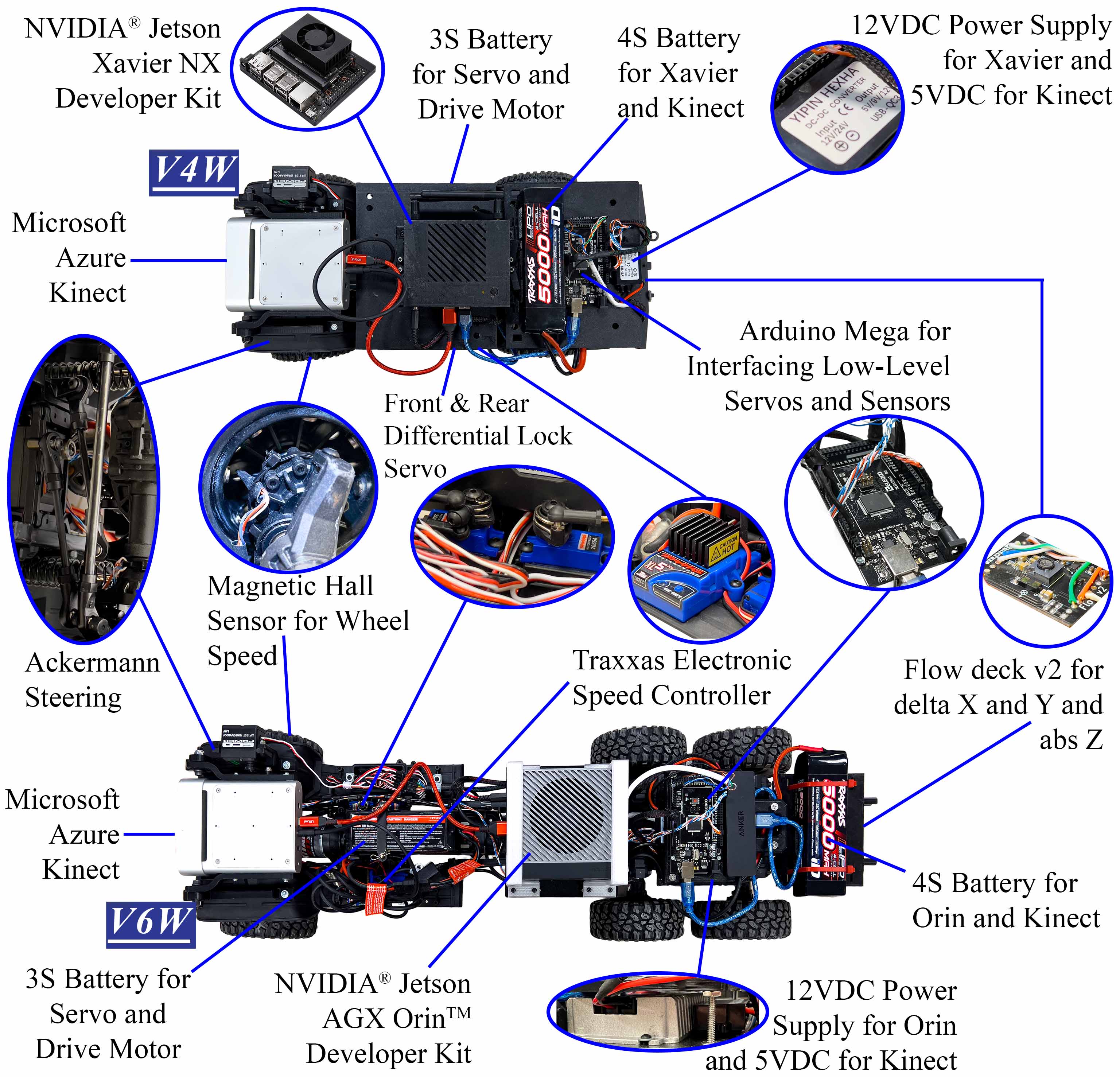

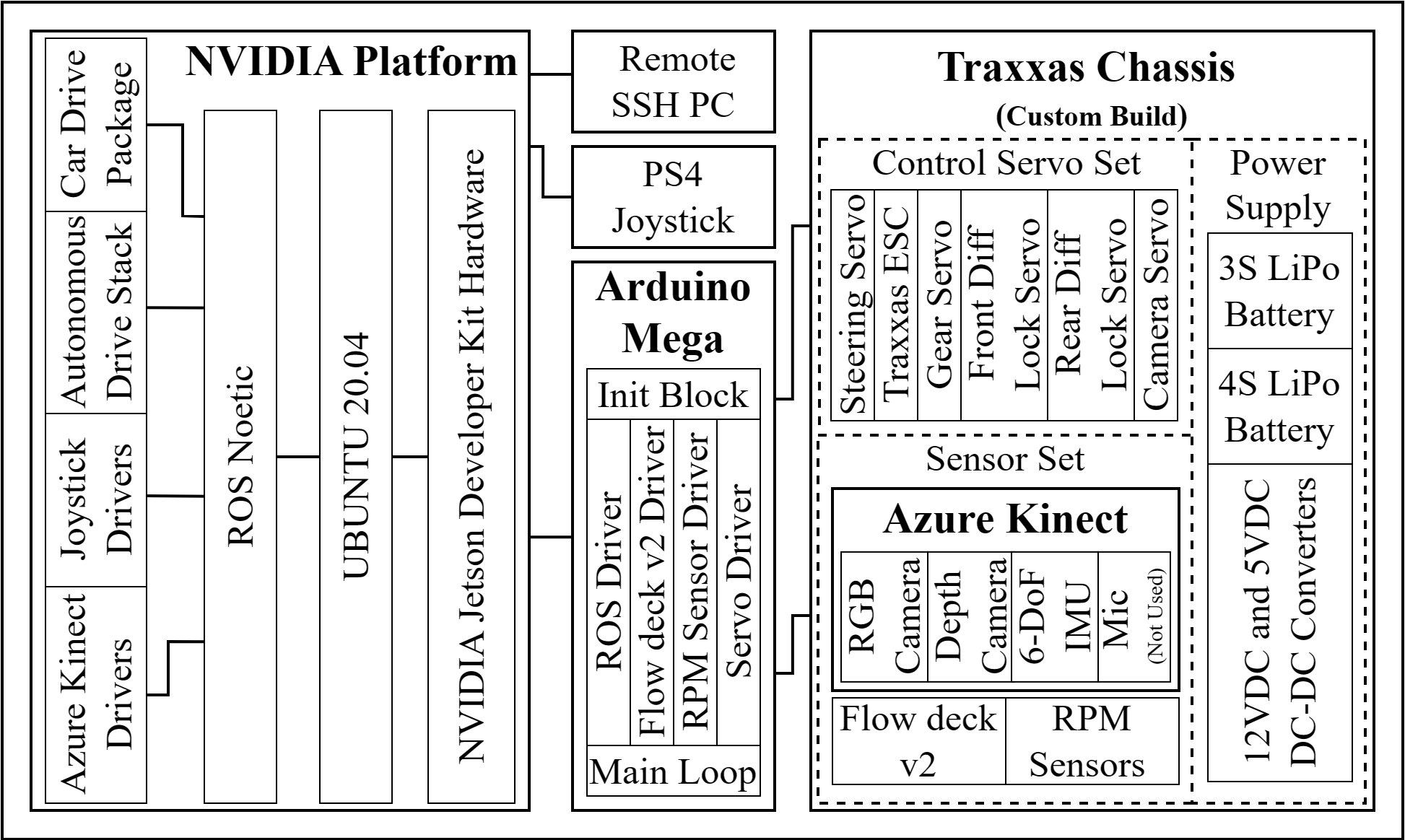

Verti-6-Wheeler (V6W)

For the mechanical components in D1 to D4, we base our platform on an off-the-shelf, three-axle, six-wheel, all-wheel-drive, off-road vehicle chassis from Traxxas. D1 and D2 are therefore achieved. The length of the chassis is 0.863m, with 0.471m front-to-middle and 0.603m front-to-rear axle wheelbase. The total height and width of V6W after outfitting all mechanical and electrical components are 0.200m and 0.249m. We use an Arduino Mega micro-controller to lock/unlock the front and rear differentials (D3) and switch between low and high gear (D4) through three servos. For D5, we install four magnetic sensors on the front and middle axles, and six magnets per wheel to sense the wheel rotation. We choose not to install magnetic sensors on the rear axle because the rear differential lock is shared by both the middle and rear axles. For D6, we install a Crazyflie Flow deck v2 sensor on the chassis facing downward, providing not only 2D ground speed (x and y) but also the distance between the sensor and the ground (z). For perception, we choose an Azure Kinect RGB-D camera due to its high-resolution depth perception at close range. For D7, we add a tilt joint for the camera actuated by a servo. A complementary filter estimates the camera position, and a PID controller regulates the camera pitch angle. For the core unit, we use an NVIDIA Jetson AGX Orin to provide both onboard CPU and GPU computation.
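The camera tilt loop mentioned above can be sketched as follows. This is only a minimal illustration under stated assumptions, not the implementation running on the robots: it assumes the estimated quantity is the camera pitch, that a gyro rate and two accelerometer axes are available, and that axis and sign conventions match the mounting. The class names, the blend weight `alpha`, and the PID gains are all hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class ComplementaryFilter:
    """Blends an integrated gyro rate (smooth but drifting) with an
    accelerometer-derived pitch (noisy but drift-free)."""
    alpha: float = 0.98  # hypothetical blend weight, not from the source
    pitch: float = 0.0   # current pitch estimate in radians

    def update(self, gyro_rate: float, accel_x: float, accel_z: float, dt: float) -> float:
        # Pitch implied by the gravity direction (axis convention is an assumption).
        accel_pitch = math.atan2(accel_x, accel_z)
        # Pitch predicted by integrating the gyro rate over one time step.
        gyro_pitch = self.pitch + gyro_rate * dt
        self.pitch = self.alpha * gyro_pitch + (1.0 - self.alpha) * accel_pitch
        return self.pitch


@dataclass
class PID:
    """Textbook PID controller; the gains are placeholders to be tuned."""
    kp: float
    ki: float
    kd: float
    integral: float = 0.0
    prev_error: float = 0.0

    def step(self, target: float, measured: float, dt: float) -> float:
        error = target - measured
        self.integral += error * dt
        derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


if __name__ == "__main__":
    pitch_filter = ComplementaryFilter()
    controller = PID(kp=1.5, ki=0.1, kd=0.05)
    # One control tick: estimate the pitch, then compute a servo correction
    # that drives the camera toward a level (zero) pitch.
    estimate = pitch_filter.update(gyro_rate=0.02, accel_x=0.1, accel_z=9.8, dt=0.01)
    print(controller.step(target=0.0, measured=estimate, dt=0.01))
```

A larger `alpha` trusts the gyro more and reacts more slowly to the accelerometer, which is usually the safer choice on a chassis that is shaken by rocks.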

Verti-4-Wheeler (V4W)

Considering that most wheeled robots have four wheels, we also build a four-wheeled platform based on an off-the-shelf, two-axle, four-wheel-drive, off-road vehicle from Traxxas. The length of the V4W chassis is 0.523m, with a 0.312m wheelbase. The total height and width of V4W are 0.200m and 0.249m. D1 and D2 are therefore achieved. Most components remain the same as the six-wheeler, but to accommodate the small footprint and payload capacity of the four-wheeler, we replace the NVIDIA Jetson AGX Orin with a Xavier NX.

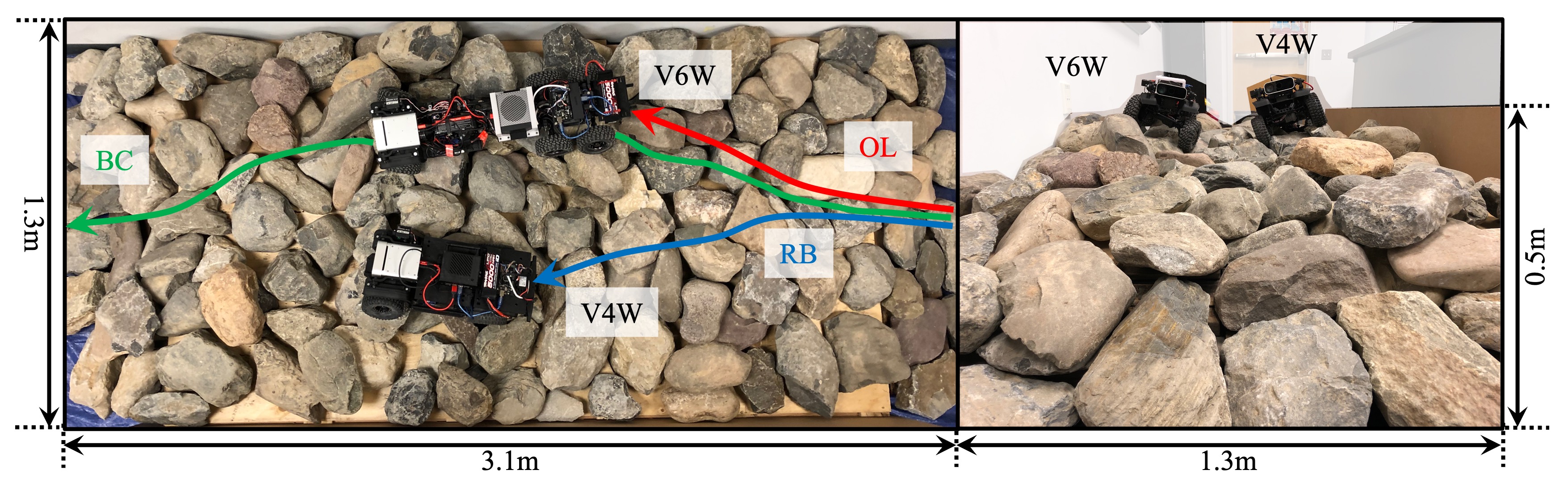

Testbed

In addition to outdoor field experiments, we construct a custom indoor testbed of vertically challenging terrain for controlled and repeatable experiments: hundreds of rocks and boulders of an average size of 30cm (at the same scale as the V6W and V4W) are randomly laid out and stacked up on a 3.1m × 1.3m test course. The highest elevation of the test course can reach up to 0.5m, more than twice the height of both vehicles. The testbed is highly reconfigurable by shuffling the pose of each rock/boulder.

Datasets

Considering the difficulty in representing surface topography and modeling complex vehicle dynamics and the recent success in data-driven mobility \cite{xiao2022motion}, we collect two datasets with the two wheeled robots on our custom-built testbed. We reconfigure our testbed multiple times, and both robots are manually driven through different vertically challenging terrain. We collect the following data streams from the onboard sensors and human teleoperation commands: RGB ($1280\times720\times3$) and depth ($512\times512$) images $i$, wheel speed $w$ (4D float vector for four wheels), ground speed $g$ (relative movement indicator along $\Delta x$ and $\Delta y$ and displacement along $z$, along with two binary reliability indicators for speeds and displacement), differential release/lock $d$ (2D binary vector for both front and rear differentials), low/high gear switch $s$ (1D binary vector), linear velocity $v$ (scalar float number), and steering angle $\omega$ (scalar float number). Our dataset $\mathcal{D}$ is therefore $ \mathcal{D} = \{ i_t, w_t, g_t, d_t, s_t, v_t, \omega_t \}_{t=1}^N $, where $N$ indicates the total number of data frames.
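To make the layout of one data frame concrete, the tuple $(i_t, w_t, g_t, d_t, s_t, v_t, \omega_t)$ can be sketched as a small record type. This is an illustrative sketch only: the class and field names are hypothetical, the on-disk format of the published datasets may differ, and the boolean encodings (`True` meaning locked, `True` meaning low gear) are assumptions.

```python
from dataclasses import dataclass
from typing import Any, List, Tuple

# Image sizes as listed in the text above.
RGB_SHAPE = (1280, 720, 3)
DEPTH_SHAPE = (512, 512)


@dataclass
class GroundSpeed:
    """g_t: readings from the downward-facing flow sensor."""
    dx: float             # relative movement indicator along x
    dy: float             # relative movement indicator along y
    z: float              # displacement along z
    speed_reliable: bool  # reliability indicator for dx and dy
    z_reliable: bool      # reliability indicator for z


@dataclass
class Frame:
    """One data frame at time step t (hypothetical field names)."""
    image: Any                     # i_t: RGB and depth images, left opaque here
    wheel_speed: Tuple[float, ...]  # w_t: 4D float vector
    ground_speed: GroundSpeed       # g_t
    diff_lock: Tuple[bool, ...]     # d_t: (front, rear), True = locked (assumed)
    low_gear: bool                  # s_t: True = low gear (assumed)
    linear_velocity: float          # v_t
    steering: float                 # omega_t


def validate(frame: Frame) -> None:
    """Raises ValueError if the vector sizes do not match the description."""
    if len(frame.wheel_speed) != 4:
        raise ValueError("wheel speed must have four entries")
    if len(frame.diff_lock) != 2:
        raise ValueError("differential lock must have two entries")


if __name__ == "__main__":
    dataset: List[Frame] = [
        Frame(None, (1.0, 1.1, 0.9, 1.0), GroundSpeed(0.1, 0.0, 0.05, True, True),
              (True, False), True, 0.3, 0.0),
    ]
    for frame in dataset:
        validate(frame)
    print(len(dataset))  # N, the total number of data frames
```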

The V6W and V4W datasets include 50 and 64 teleoperated trials of 46667 and 70143 data frames of the 6-wheeler and 4-wheeler crawling over different vertically challenging rock/boulder courses, respectively. To ensure that the human demonstrator has access only to the same perception as the robot, teleoperation is conducted in first-person view from the onboard camera, rather than in third-person view.

With a more mechanically capable chassis and two more wheels, the demonstrator can demonstrate crawling behaviors on V6W with ease, i.e., mostly driving forward, slowing down when approaching an elevated terrain patch, and only using the steering to circumvent very difficult obstacles or ditches in front of V6W. On the other hand, the demonstration of V4W takes much more effort, and the demonstrator needs to carefully control both the linear velocity and steering angle at fine resolution to negotiate through a variety of vertically challenging terrain.

Both V4W and V6W datasets are published at George Mason University Dataverse.

Algorithms

Open-Loop Controller (OL)

As a baseline, we implement an open-loop controller that drives the robots toward vertically challenging terrain previously deemed as non-traversable obstacles, simply treating them as free spaces. Our open-loop controller locks the differentials and uses the low gear all the time. We set a constant linear velocity to drive the robot forward. No onboard perception is used for the open-loop controller.
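The open-loop baseline amounts to emitting the same command at every control step. A minimal sketch, assuming a hypothetical `Command` record, a placeholder speed of 0.3, and zero steering for "driving forward" (none of these names or values come from the released code):

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Command:
    """Hypothetical command record sent to the vehicle at each control step."""
    linear_velocity: float
    steering: float
    front_diff_locked: bool
    rear_diff_locked: bool
    low_gear: bool


def open_loop_command(constant_velocity: float = 0.3) -> Command:
    """Returns the same command regardless of any sensor reading:
    both differentials locked, low gear engaged, constant forward speed."""
    return Command(
        linear_velocity=constant_velocity,  # placeholder value, not from the source
        steering=0.0,                       # assumed: straight ahead
        front_diff_locked=True,
        rear_diff_locked=True,
        low_gear=True,
    )


if __name__ == "__main__":
    print(open_loop_command())
```

Because the function takes no sensor input, it makes explicit that the baseline ignores onboard perception entirely.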

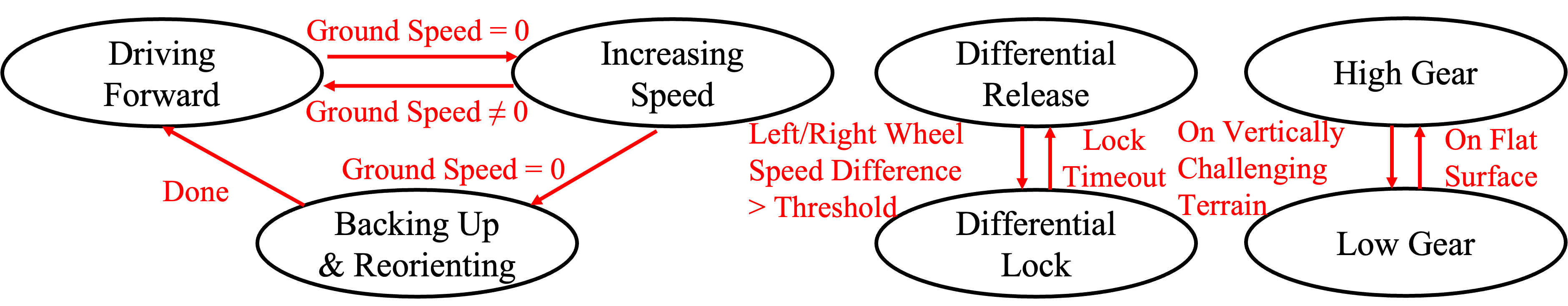

Rule-Based Controller (RB)

We design a classical rule-based controller based on our heuristics on off-road driving: we lock the corresponding differential when we sense wheel slippage; we use the low gear when ascending steep slopes; when getting stuck on rugged terrain, we first increase the wheel speed and attempt to move the robot forward beyond the stuck point; if unsuccessful, we then back up the robot to get unstuck, and subsequently try a slightly different route. With the hardware described in the Platforms section, our robots are able to perceive all aforementioned information with the onboard sensors and initiate corresponding actions. The finite state machine of the rule-based controller is shown below.
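The heuristics above can be read as a small state machine. The sketch below is one possible reading of the prose, not the authors' actual state machine: the state names, the boolean `Sensed` inputs (assumed to be computed upstream from the onboard sensors), the speeds, and the `patience` tick count are all hypothetical.

```python
from dataclasses import dataclass
from enum import Enum, auto


class State(Enum):
    DRIVE = auto()    # normal forward driving
    PUSH = auto()     # stuck: raise wheel speed and try to push through
    BACK_UP = auto()  # pushing failed: reverse to get unstuck
    REROUTE = auto()  # try a slightly different route


@dataclass
class Sensed:
    front_slip: bool    # slippage sensed on the front axle
    rear_slip: bool     # slippage sensed on the rear axle
    steep_ascent: bool  # robot is ascending a steep slope
    stuck: bool         # robot is not making progress


@dataclass
class Action:
    velocity: float
    steering: float
    lock_front: bool
    lock_rear: bool
    low_gear: bool


class RuleBasedController:
    def __init__(self, cruise=0.3, boost=0.6, reverse=-0.3, detour=0.3, patience=3):
        # (velocity, steering) issued in each state; all values are placeholders.
        self.motion = {
            State.DRIVE: (cruise, 0.0),
            State.PUSH: (boost, 0.0),
            State.BACK_UP: (reverse, 0.0),
            State.REROUTE: (cruise, detour),
        }
        self.patience = patience  # ticks to spend in a recovery state
        self.state = State.DRIVE
        self.ticks = 0

    def _enter(self, state: State) -> None:
        self.state = state
        self.ticks = 0

    def step(self, s: Sensed) -> Action:
        if self.state is State.DRIVE and s.stuck:
            self._enter(State.PUSH)
        elif self.state is State.PUSH:
            if not s.stuck:
                self._enter(State.DRIVE)
            elif self.ticks >= self.patience:
                self._enter(State.BACK_UP)
        elif self.state is State.BACK_UP and self.ticks >= self.patience:
            self._enter(State.REROUTE)
        elif self.state is State.REROUTE and self.ticks >= self.patience:
            self._enter(State.DRIVE)
        self.ticks += 1
        velocity, steering = self.motion[self.state]
        # Differential locks and gear follow the sensed conditions in every state.
        return Action(velocity, steering, s.front_slip, s.rear_slip, s.steep_ascent)
```

Keeping the lock and gear rules outside the state transitions means they apply equally while driving, pushing, or recovering, which matches the way the heuristics are listed independently in the text.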

End-to-End Learning Controller (BC)

We also develop an end-to-end learning-based controller to enable data-driven mobility. We aim to learn a motion policy that maps from the robots’ onboard perception to raw motor commands to drive the robots over vertically challenging terrain. Utilizing the datasets we collect, we adopt an imitation learning approach, i.e., Behavior Cloning, to regress from perceived vehicle state information to demonstrated actions.
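Behavior Cloning reduces to supervised regression on (state, action) pairs from the demonstrations. The sketch below uses a linear policy trained with stochastic gradient descent on a squared-error loss, purely as a stand-in: the actual policy architecture is not described here and is presumably a richer model. The function names, the toy state features, and the hyperparameters are assumptions.

```python
from typing import List, Sequence, Tuple

Matrix = List[List[float]]


def predict(weights: Matrix, bias: List[float], state: Sequence[float]) -> List[float]:
    """Linear policy: one output per action dimension."""
    return [sum(w * x for w, x in zip(row, state)) + b
            for row, b in zip(weights, bias)]


def fit_behavior_cloning(
    states: Sequence[Sequence[float]],
    actions: Sequence[Sequence[float]],
    epochs: int = 200,
    lr: float = 0.05,
) -> Tuple[Matrix, List[float]]:
    """Regresses demonstrated actions on states by minimizing squared error."""
    n_in, n_out = len(states[0]), len(actions[0])
    weights = [[0.0] * n_in for _ in range(n_out)]
    bias = [0.0] * n_out
    for _ in range(epochs):
        for state, action in zip(states, actions):
            pred = predict(weights, bias, state)
            for k in range(n_out):
                err = pred[k] - action[k]  # gradient of 0.5 * err^2 w.r.t. pred[k]
                for j in range(n_in):
                    weights[k][j] -= lr * err * state[j]
                bias[k] -= lr * err
    return weights, bias


if __name__ == "__main__":
    # Toy demonstrations: slow down as a made-up "slope" feature grows and
    # steer in proportion to a made-up "lateral offset" feature.
    grid = [-1.0, 0.0, 1.0]
    demo_states = [[slope, offset] for slope in grid for offset in grid]
    demo_actions = [[0.5 - 0.3 * slope, 0.2 * offset] for slope, offset in demo_states]
    weights, bias = fit_behavior_cloning(demo_states, demo_actions)
    print(predict(weights, bias, [0.5, -0.5]))  # cloned (v, omega) for a new state
```

In the real setting, the states would be built from the recorded sensor streams and the actions from the teleoperation commands $v$ and $\omega$.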

Gallery

Links

Contact

For questions, please contact:

Dr. Xuesu Xiao

Department of Computer Science

George Mason University

4400 University Drive MSN 4A5, Fairfax, VA 22030 USA

xiao@gmu.edu